OmarCrypto

🧵 The difference between a Bull Run and a Supercycle

People often confuse the two.

The reason is that many judge the difference only by price multiples, which is a mistake.

❌ The difference is not 10x or 100x, and time alone is not enough.

✔️ The real difference lies in the structure of the movement and market behavior.

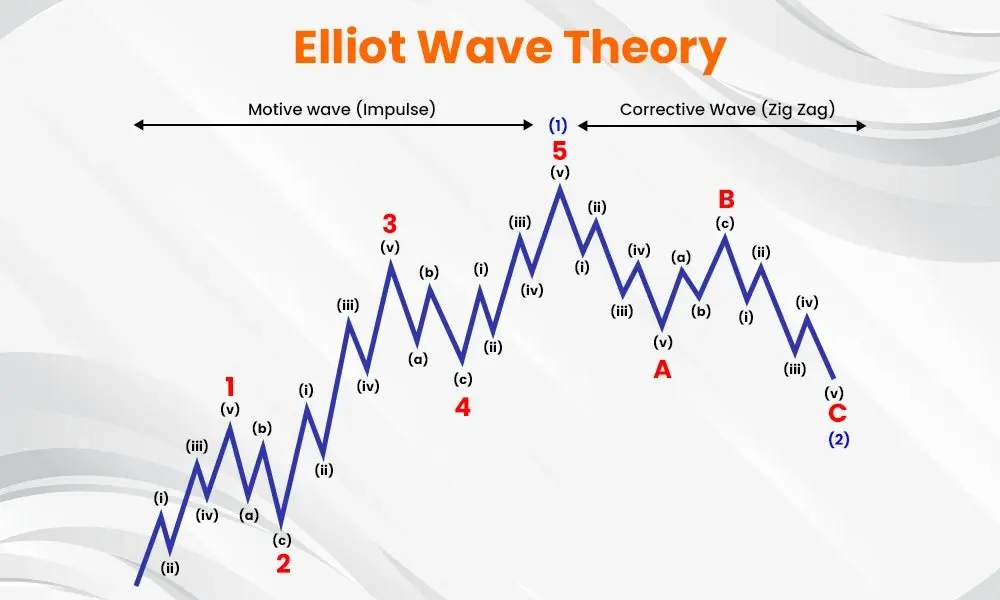

🟢 The Bull Run

▪️ An upward wave within a cycle.

▪️ Can last for weeks or months.

▪️ Occurs with Bitcoin and altcoins.

▪️ It could be wave 3, wave C, or simply a clear uptrend with no complex structure.

🔵 The Supercycle

▪️ A major cycle lasting years.

▪️ From the bottom of wave 1 to the top o

The Convert Lucky Draw event is officially live. Complete a trade of just $1 to enter the draw—every draw is a winner. You can start a convert trade with as little as $1 and enjoy a fast, zero-fee trading experience. Complete simple tasks to unlock exclusive rewards and start your Convert journey now. https://www.gate.com/campaigns/3741?ref=VQJCU1FWCQ&ref_type=132

大傻币

Created by @CurlyHairIsFine

Listing progress: 0.00%

MC: $3.55K

Qinglong Yanyue splits the sky; Red Hare treads fire and shakes the nine provinces! Zhang Fei's fury moves mountains and rivers; loyalty and righteousness will not fade in a thousand years. I hope those who bought Guan Gong coins won't get cut down. People of the martial world should act by its code and stay true to their conscience. I promise up front not to dump on the market and to build a harmonious Chinese meme community. I hope to create a pure land in this chaotic environment, one worthy of the two characters of Guan Gong's name. #我看好的中文Meme币 (Chinese meme coins I'm bullish on)

MEME -2.2%

MC: $100.42K, Holders: 32

100.00%

InvincibleArbitrageKing: If you don't get rich, who will get rich?

Gold rises above 4630 then pulls back: can the bullish trend continue?

Author: Gold Miner Old Cat

Spot gold opened today at 4523.05 (yesterday’s close was 4509.93), reached a high of 4630.21 and a low of 4513.23, and closed at 4596.85, up 1.93%. After a one-hour rally, it retreated slightly and consolidated, with bullish momentum still strong.

In the news, expectations of easing global monetary policy, weak economic data from major economies, and geopolitical tensions have driven safe-haven funds into gold. Coupled with a weakening US dollar index, these factors jointly contributed to a stro

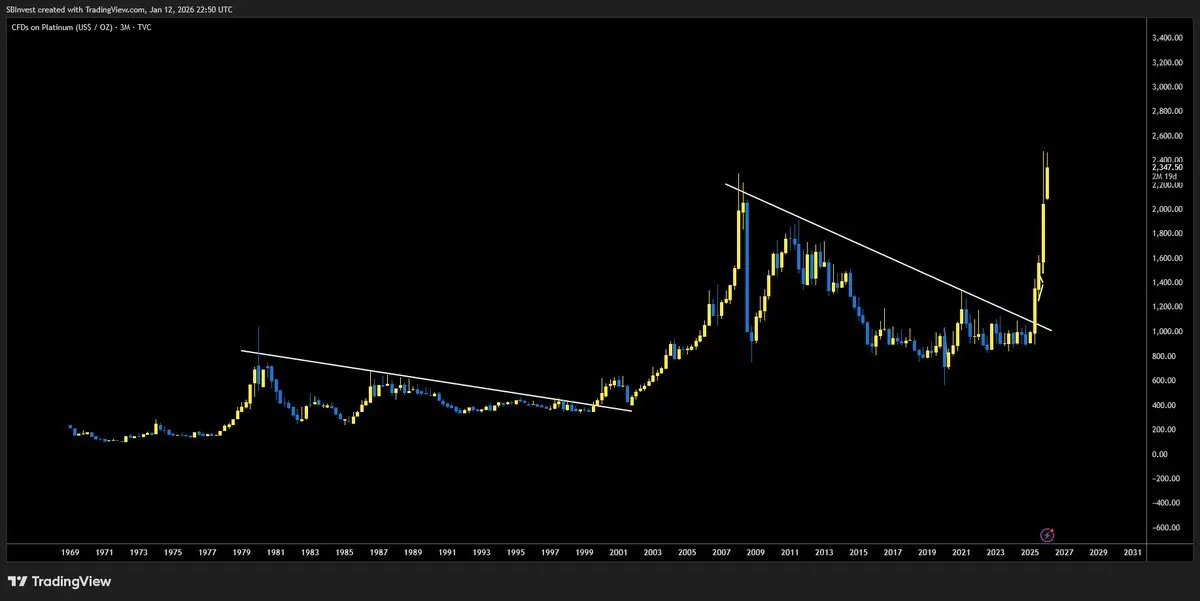

Metals are just bigger shitcoins with more utility

Markets always pump in the same way because people are always the same

Study TA and you can capitalize on volatility

TA 6.45%

Check out Gate and join me in the hottest event! https://www.gate.com/campaigns/3791?ref=VQIRVFPAAG&ref_type=132

#Trading Bot# I am using the "I'm Coming"/USDT Spot Martingale Bot on Gate; total return since creation: -8.02%

Overall, "I'm Coming" currently has a high market value and liquidity, but be sure to stay alert to the fading hype in the crypto space and the risk of holding positions. If you need more detailed technical analysis or on-chain data, just let me know!

我踏马来了 -16.07%

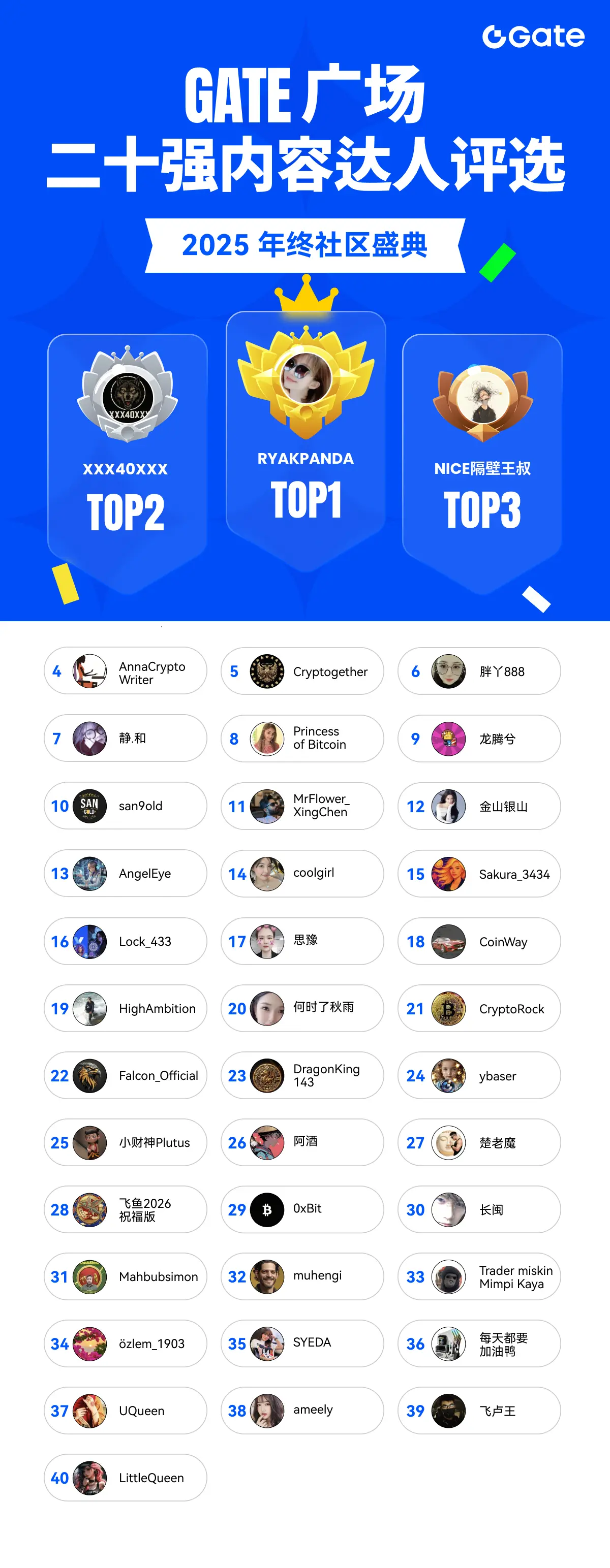

This dead coin can't even break above 0.027. Add to the position, roll it over, and go short. #Gate 2025 Year-End Community Gala#

Top Streamers & Content Creators Year-End Awards

Who will be the Top Streamers of the Year? Who will claim the top spot on the Content Creator leaderboard? Join me in voting to support your favorite streamers and creators, and witness the rise of community stars!

https://www.gate.com/activities/community-vote-2025?ref=VQIRVFPDAQ&refType=2&refUid=47059745&ref_type=165&utm_cmp=xjdtmcgP

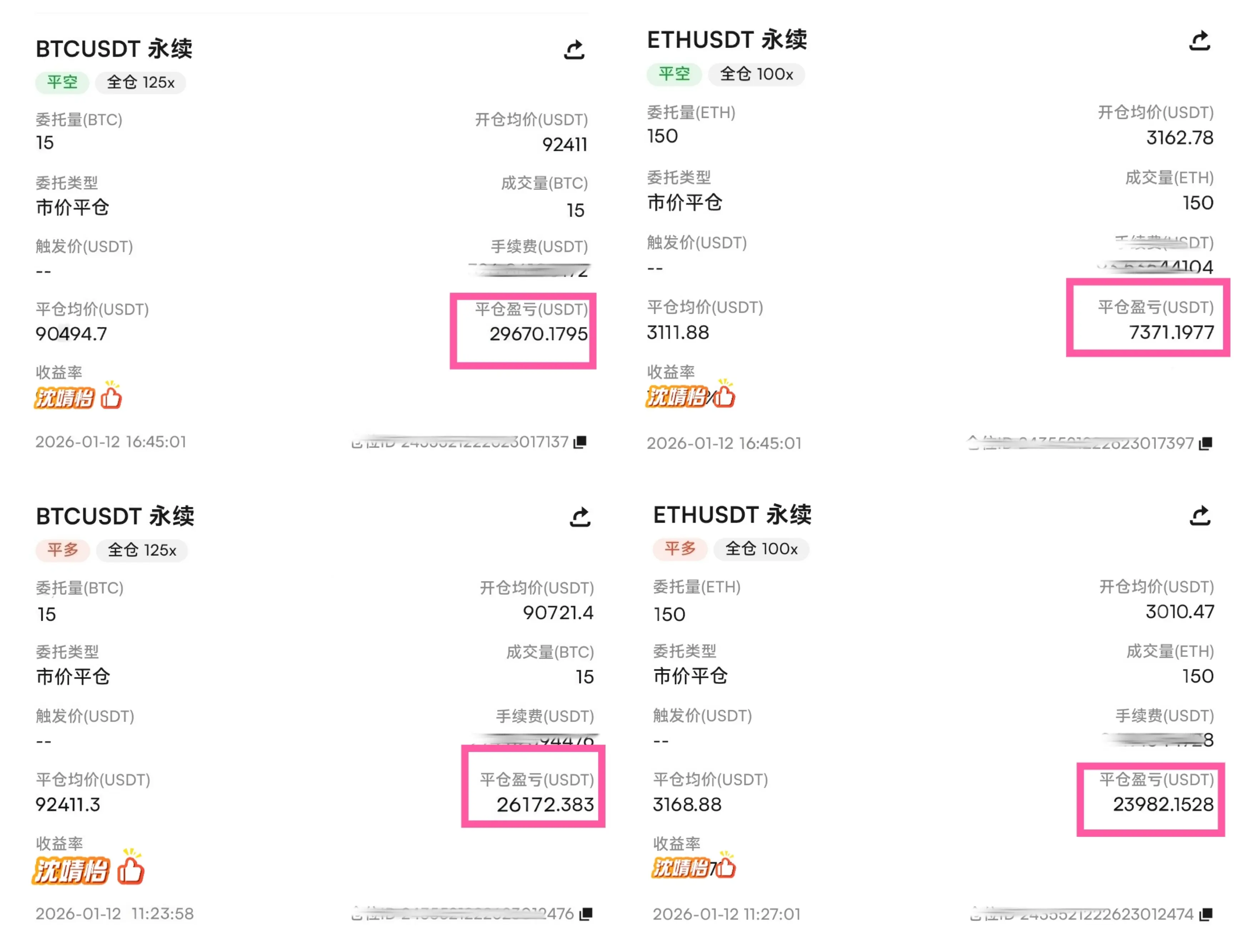

1.13 Morning Jingyi Trading Strategy Analysis

Last night, after accumulating at a low level, the price followed the US stock market upward, surged to 92,000, then pulled back, and is now consolidating around 91,000.

The 4-hour candlestick chart shows alternating bullish and bearish candles, with the bulls slightly stronger. After testing the upper band again, the price pulled back. Long upper shadows indicate a fierce battle between bulls and bears; the Bollinger Bands are slowly widening, and bullish momentum is steadily building for a potential upward move. On the hourly chart,

踏马币

Created by @CurlyHairIsFine

Listing progress: 4.52%

MC: $4.51K

🇺🇸 WHITE HOUSE ADMIN JUST CONFIRMED TO PASS #BITCOIN AND CRYPTO MARKET STRUCTURE BILL SOON

“CLARITY IS NEAR”

BTC 0.32%

🎉 I am honored to be selected among the Top 40 Content Creators at the Gate 2025 Year-End Gala! Vote for me to win prizes like iPhone 17 Pro Max, Mi Band, JD Gift Cards, and more! Click here to vote: https://www.gate.com/activities/community-vote-2025 Thank you for your support—let's rise in the rankings together! ❤️⚘️👍

HighAmbition: Buy To Earn 💎

Gate Square Beginner Posting Guide https://www.gate.com/campaigns/3287?ch=193&ref_type=132

Qinglong Yanyue splits the sky; Red Hare treads fire and shakes the nine provinces! Zhang Fei's fury moves mountains and rivers; loyalty and righteousness will not fade in a thousand years. I hope those who bought Guan Gong coins won't get cut down. People of the martial world should act by its code and stay true to their conscience. I promise up front not to dump on the market and to build a harmonious Chinese meme community. I hope to create a pure land in this chaotic environment, one worthy of the two characters of Guan Gong's name. #我看好的中文Meme币 (Chinese meme coins I'm bullish on)

MEME -2.2%

MC: $8.38K, Holders: 3

19.86%
